Demands for efficiency and sustainability influence developments in all areas of society. Intelligent light management systems are increasingly being used in public spaces to reduce energy consumption and increase user comfort. The key to this is the intelligent networking of luminaires, sensors and control elements. Sensor-linked lighting systems are intended to detect the presence of people so that the lighting can be controlled according to the situation or to occupancy.

Until now, the lighting industry has relied on passive infrared (PIR) sensors for presence detection. However, this method is insufficient for an intelligent luminaire because the user must be in motion for the sensor to respond. Conventional video sensors, consisting of a CMOS image sensor and a fisheye lens, are not suitable either, owing to their high system costs and large hemispherical design (approx. Ø 120 mm × H 50 mm). In contrast, the multiaperture lenses used in InnoSYS are aimed primarily at a highly miniaturized, ultra-flat design with a discreet appearance. In addition, multiaperture objectives have a low unit price in high-volume production thanks to cost-efficient wafer-scale manufacturing.

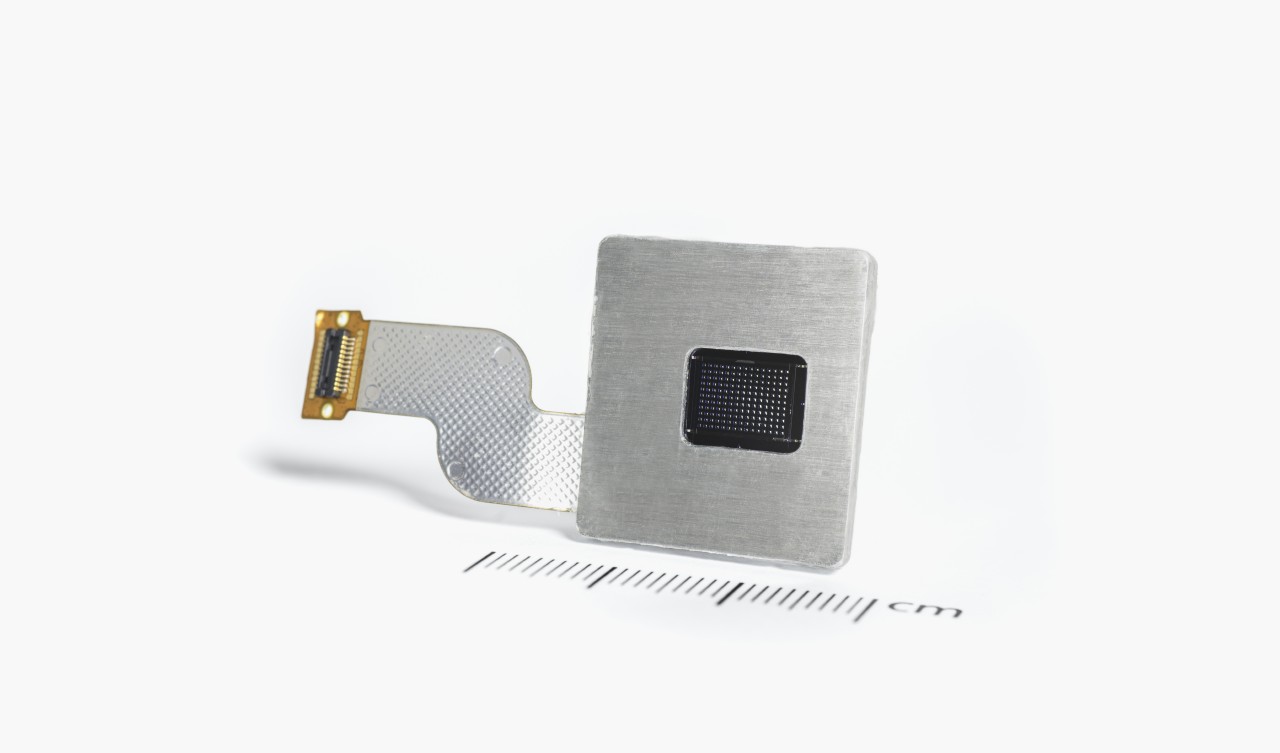

The implemented demonstrator consists of a double-sided microlens array, cast on a pre-patterned diaphragm-array wafer, and a crosstalk module, i.e. a perforated plate that separates the channels and suppresses optical crosstalk. Both components are aligned in front of a CMOS sensor. The micro-optical single master molds for the freeform microlens arrays were manufactured by LED grayscale lithography (shape deviation < 100 nm). With 15 x 11 channels and an extremely short focal length (< 0.7 mm), the objective covers a maximum field of view of 110° at an f-number of 3. Each channel of the multiaperture objective has a different viewing direction and therefore images a different part of the overall object field. Even at the edge of the image, high spatial frequencies (up to 110 LP/mm) are resolved with low image distortion (< 6 %). Software developed in-house reassembles the partial images and corrects vignetting and distortion.
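
The reassembly of partial images can be illustrated with a minimal Python sketch. It is not the project's software, whose internals are not described here. It assumes that the raw frame divides evenly into an 11 x 15 grid of equally sized partial images, that vignetting can be approximated by a flat-field reference frame, and that each partial image is rotated by 180° and has to be flipped back. The function name `reassemble` and all of its parameters are hypothetical, and distortion correction is left out.

```python
import numpy as np

ROWS, COLS = 11, 15  # channel grid taken from the text (15 x 11 channels)


def reassemble(raw, flat, rows=ROWS, cols=COLS, flip_tiles=True, eps=1e-6):
    """Flat-field correct a multi-channel frame and re-orient its partial images.

    raw  : 2D array, sensor frame containing rows x cols partial images
    flat : 2D array of the same shape, frame of a uniformly lit scene
           (assumed calibration data, used to estimate vignetting)
    """
    raw = np.asarray(raw, dtype=np.float64)
    flat = np.asarray(flat, dtype=np.float64)
    if raw.shape != flat.shape:
        raise ValueError("raw and flat must have the same shape")
    height, width = raw.shape
    if height % rows or width % cols:
        raise ValueError("frame size must be a multiple of the channel grid")

    # Vignetting correction: divide by the flat field normalized to its peak.
    gain = flat / flat.max()
    corrected = raw / np.maximum(gain, eps)

    # View the frame as a grid of tiles: index order (row, y, column, x).
    tile_h, tile_w = height // rows, width // cols
    tiles = corrected.reshape(rows, tile_h, cols, tile_w)

    if flip_tiles:
        # Assumption: each channel delivers an image rotated by 180 degrees.
        tiles = tiles[:, ::-1, :, ::-1]

    # Put the tiles back into a single image of the original size.
    return tiles.reshape(height, width)
```

A call such as `reassemble(frame, flat_frame)` returns an image of the same size as the input. In a real system the viewing fields of neighbouring channels would also have to be registered against each other, which this sketch does not attempt.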

The presented project is funded by the German Federal Ministry of Research, Technology and Space (BMFTR; formerly German Federal Ministry of Education and Research (BMBF)) within the framework of the research project InnoSYS (FKZ: 16ES0268).

Authors: Christin Gassner, Jens Dunkel, Alexander Oberdörster, Sylke Kleine, Andreas Brückner