Robot assistant “3D-Kosyma” learns to understand human gestures

A robot assistant co-developed at Fraunhofer IOF will soon enable humans and machines to interact through human gestures. The “3D-Kosyma” system is designed for use in automotive manufacturing, among other areas, where it is intended to set new standards in quality assurance.

A robot interprets human movement using a 3D sensor

Peter Kühmstedt is standing in his laboratory at Fraunhofer IOF in Jena. Next to him is an unprocessed car body component: a car door. It has an imperfection that is barely visible to the human eye, as its surface is deformed by a dent. Kühmstedt, head of the imaging and sensing department at the institute, points his finger at the spot on the door's outer skin where he suspects the damage to be. A nearby robot with 3D sensors then comes to life. It recognizes the position of the researcher's finger and follows the indicated direction.

The complex measuring system begins its investigation and performs 3D measurements at the indicated point. It then processes the data captured by the sensor and compares them with defect-free surfaces to determine the size and extent of the defect on the component.

And indeed, the system finds the dent and marks the damaged area with a light signal. This is “3D-Kosyma”: a mobile, robotic inspection system. Based on human-machine interaction, it can be used in technical production and maintenance environments.

Making inspection systems for quality assurance simpler and more efficient

The new robotic assistant has one main goal: to make the test measurements required in any industrial production simpler and more efficient, thereby promoting quality assurance in industry in the long term.

“Our aim was to implement the lowest possible threshold for interaction between humans and robots in quality assurance,” says Dr. Peter Kühmstedt, describing the project's approach. Project manager Dr. Tilo Lilienblum from the cooperation partner INB Vision AG adds: “Quite intuitively, a person can point to a part. The robot follows the gesture and measures the part.”

Development completed, talks with industrial partners underway

Now that the first development phase of the robot assistant has been completed, the system is intended to find its way into industry. To this end, talks are currently being held with various business partners. A suitable opportunity arose last October, when the Automotive Cluster East Germany and the BMW plant in Leipzig hosted a technology pitch in Leipzig. Representatives of the “3D-Kosyma” project were among the presenters.

Their presentation was met with a positive response, and as a result BMW is now holding talks with the project team about possible application scenarios in automotive production. Detailed coordination on an inspection system tailored to the needs of the plant is planned for the near future.

Fraunhofer IOF and INB Vision pool their expertise in 3D detection

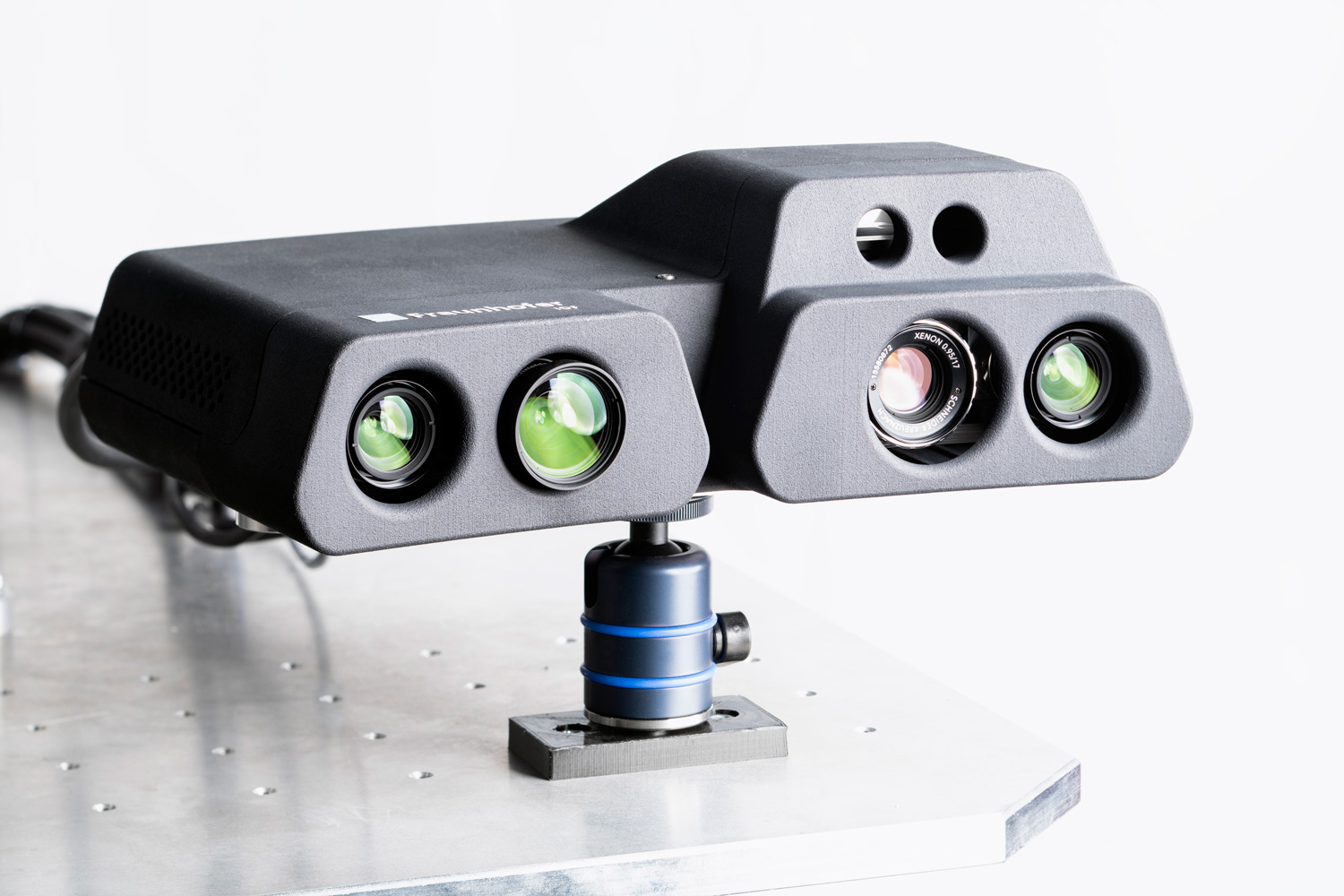

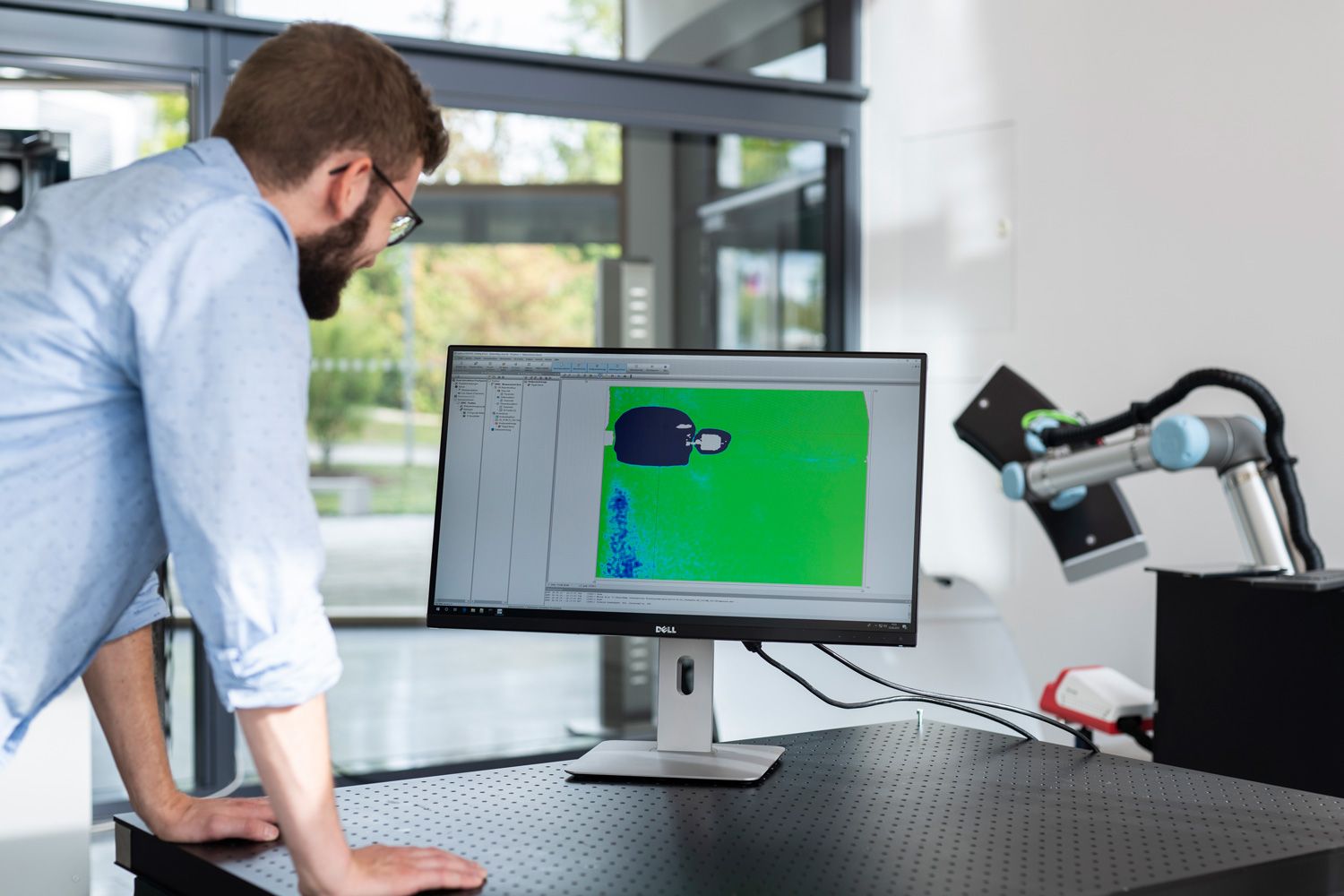

The robot assistant consists of several components. Fraunhofer IOF contributes its expertise in the 3D detection of large-scale measurement scenes: a 3D sensor developed at Fraunhofer IOF monitors the scene and registers both a person’s gestures and the components on display. As soon as the system detects via this sensor that someone is pointing at an object, a separate robot arm carrying a second 3D sensor from INB Vision AG moves toward that component.
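The release does not describe how the overview sensor turns a pointing gesture into a target. One common approach is to cast a ray from a hand keypoint through the fingertip and select the first scene point lying close to that ray. The Python sketch below illustrates this idea under stated assumptions: the function name `pointing_target`, the two keypoint inputs and the `max_dist` tolerance are hypothetical, and the scene is assumed to be available as a NumPy point cloud in the sensor's coordinate frame. It is not the “3D-Kosyma” implementation.

```python
import numpy as np


def pointing_target(base, fingertip, cloud, max_dist=0.02):
    """Return the scene point hit first by the pointing ray, or None.

    Hypothetical sketch, not the project's method.
    base, fingertip: (3,) arrays, e.g. hand and index-finger keypoints.
    cloud: (N, 3) array of scene points from the overview 3D sensor.
    max_dist: maximum distance from the ray (same units as cloud).
    """
    base = np.asarray(base, dtype=float)
    fingertip = np.asarray(fingertip, dtype=float)
    cloud = np.asarray(cloud, dtype=float)

    # Unit direction of the pointing ray (from hand through fingertip).
    direction = fingertip - base
    direction /= np.linalg.norm(direction)

    rel = cloud - fingertip            # vectors from fingertip to each point
    t = rel @ direction                # position of each point along the ray
    # Perpendicular distance of each point from the ray.
    perp = np.linalg.norm(rel - np.outer(t, direction), axis=1)

    # Keep only points in front of the finger and close to the ray.
    mask = (t > 0) & (perp <= max_dist)
    if not mask.any():
        return None

    # The candidate closest to the finger is the first surface the ray meets.
    idx = np.flatnonzero(mask)
    return cloud[idx[np.argmin(t[idx])]]
```

For example, with a flat "door" sampled at x = 1 and a hand at the origin pointing along the x-axis, the function returns the door point on the axis; a point behind the hand is ignored because its position along the ray is negative.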

The second 3D sensor then takes over the inspection process and precisely measures the 3D surface of the component. From this information, the system can detect and visually indicate even the smallest local deviations from the desired surface shape, i.e. the stored target data. An employee is thus alerted to the deviation and can see on a separate monitor how serious the defect is. They can then decide how to proceed with the manufacturing process.
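Comparing a measurement with stored target data can be illustrated with a simple height-map difference. The sketch below assumes, hypothetically, that the measured surface has already been registered to the target geometry and resampled onto the same grid. The names `surface_deviation`, `tol` and `cell_area` are illustrative, and the threshold value is not taken from the actual system.

```python
import numpy as np


def surface_deviation(measured, nominal, tol=0.05, cell_area=1.0):
    """Compare a measured height map with the nominal (target) height map.

    Hypothetical sketch: both inputs are 2-D arrays of surface heights on
    the same, already aligned grid (e.g. in mm).
    tol: absolute deviation above which a cell counts as defective.
    cell_area: surface area represented by one grid cell (e.g. mm^2).
    Returns the deviation map, a boolean defect mask, the largest absolute
    deviation and the total defective area.
    """
    measured = np.asarray(measured, dtype=float)
    nominal = np.asarray(nominal, dtype=float)

    deviation = measured - nominal          # negative values = dents
    mask = np.abs(deviation) > tol          # cells outside the tolerance
    max_dev = float(np.abs(deviation).max()) if deviation.size else 0.0
    area = float(mask.sum()) * cell_area    # extent of the defect
    return deviation, mask, max_dev, area
```

A 10 × 10 grid with a 0.2 mm dent covering four cells of 0.25 mm² each, for instance, yields a maximum deviation of 0.2 mm and a defective area of 1.0 mm². A real inspection system would additionally have to handle registration, sensor noise and connected-region analysis.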

The aim of the “3D-Kosyma” project is to develop a collaborative mobile inspection system that supports human-machine interaction in 3D quality assurance. The system is currently being developed further, primarily for processes in the automotive industry, but it can also be used in other areas of mechanical and plant engineering. Wherever an undefined situation arises in manufacturing, the system can translate gestures or signals from humans into a suitable action by the robot.

In addition to Fraunhofer IOF, the Fraunhofer Institute for Factory Operation and Automation IFF in Magdeburg, INB Vision AG Magdeburg, the software developers 3plusplus GmbH from Suhl and the Berlin innovation consultants Gitta GmbH are involved in the project.

Background: Research project “3Dsensation”

“3D-Kosyma” is part of the research project “3Dsensation”, one of ten consortia in the funding program “Zwanzig20 - Partnerschaft für Innovation” (2020 - Partnership for Innovation) of the German Federal Ministry of Education and Research.

The alliance partners of “3Dsensation” pursue the goal of enabling machines to visually capture and interpret complex scenarios through innovative 3D technologies. The consortium is laying the foundations for safe and efficient interaction between humans, machines and the environment in key areas of life and work: production, health and mobility.